Welcome

My name is Stephen Moss, and I've been tasked with providing an interesing website that will be worth your time. As such, I am bringing

you what I consider far and above the most fascinating topic known to man. In 2014 and 2015, I created a thesis project for my

Bachelor's of Arts in Linguistics at The University of Maryland, College Park. Every

word, image and video token was created by me around eight years ago (except where cited). Find my abstract below and begin to dive in

at the research introduction.

Categorical Perception (CP) is a phenomenon in which an individual's perceptual system codifies

continuous stimuli in discrete categories. There are two criteria by which CP is identified. The first is the ability of observers to separate

the stimuli along a continuum as members of distinct categories. Within such categories, if two stimuli are presented to the observer has a

lower ability to discriminate between the two if they are within the same category than if the stimuli have a category boundary between them.[6,

7]. Though this has been demonstrated in non-linguistic contexts such as facial expressions[

3, 10], color perception[10]

, and novel objects[5], this is most well documented in speech perception. A limited number

of studies have been carried out investigating CP of American Sign Language (ASL)[

1, 2, 4, 8,

9]. These studies have revealed that handshape continua display evidence of CP [

1, 4, 8, 9]

, but location continua do not[4].

In addition to handshape and location, ASL signs also are described by their palm orientation, movement, and non-manual signals [

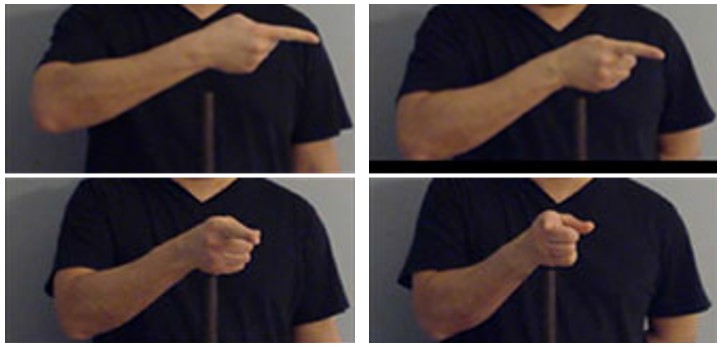

11, 12]. The stimuli in this study come from a continuum of

eleven pseudo-signs which vary across a continuum of palm orientation. Determined by context of use, signs which occur along this continuum could belong to

either two categories (fig. 1) or four categories (fig. 2). This study tested both native ASL signers and English speakers with no ASL experience.

Thus, this study crosses language experience with context in a 2x2 design.

CP is typically measured using an identification task to assess the categories of the participant and a discrimination task to measure perceptual

abilities along the continuum[1, 2, 4,

6, 7, 8]. For ASL identification

tasks, CP paradigms for identification typically take the form of ABX where A and B are exemplars of each category and X is the individual token

to be categorized[1, 2, 4,

8]. Discrimination tasks for both spoken and signed CP studies typically take the form of an AX

different-same task[1], or an ABX task[2,

4, 8] matching X to token A or B which are one or two steps apart

on the continuum.

In traditional identification tasks, the participants are forced to choose between two categories defined a priori by the research team[

1, 2, 4,

6, 7, 8]. This does not account for

conditions where categories are perceived differently than predicted by the researchers. Additionally, most studies in which this method is used,

non-native language users are typically identified as being able to identify categories distinctly, which is the first requirement for CP to be

present.[1, 2, 4,

8]. The visual mode of ASL allows more effective measurement of categories. Instead of a participant

choosing from two options, the participant has an array of eleven images from which to choose along the continuum in question.

Preliminary data suggests that native ASL users do select two distinct categories in the 2-category condition and three distinct categories—instead

of four— in the 4-category condition. The ASL naïve participants however, identify two, three, four, or five categories in the 4 category condition, and two or

three categories in the lexical condition, both much shallower than those of the native ASL participants. The lack of concurrence for these

participants may suggest ASL naïve participants do not identify categories at all. Discrimination data collected for the ASL naïve group

shows perceptual warping and no context effects. Preliminary data from the ASL native group shows perceptual warping and possible context effects.